Abnormal fastener detection model based on deep convolutional autoencoder with structural similarity

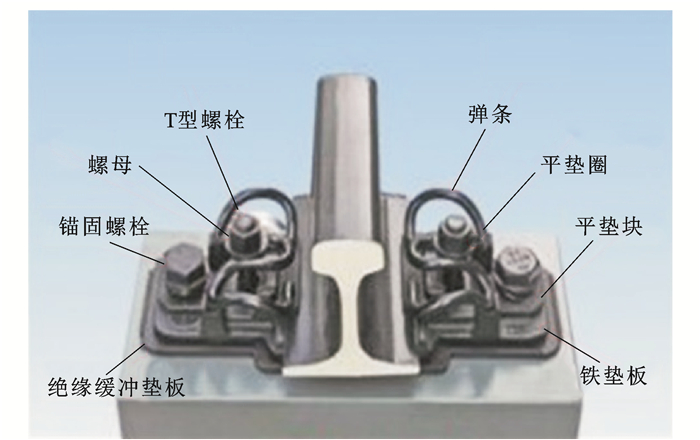

Abstract: The basic functions of the rail fastener system were presented, the existing detection technologies for abnormal rail fasteners were outlined, and the concerns and shortcomings of traditional machine-vision-based methods and deep-learning methods were summarized. The basic idea and formalization of the autoencoder were explained, and an abnormal fastener detection model based on an encoder-decoder architecture was proposed. The drawbacks of traditional pixel-level image similarity metrics were analyzed, and both the loss function and the image abnormality decision were implemented on the basis of structural similarity. A rail fastener image dataset was built to verify the performance of the proposed model. Representative false-positive and false-negative images were visualized, their appearance features were described, and the possible causes of the false positives and false negatives were analyzed. Research results show that the structural similarity index significantly improves the detection performance of the model. Compared with models that have the same network architecture but use the mean absolute error (MAE) or the mean square error (MSE) as the similarity metric, the proposed model raises the F-value by 14.5 and 16.2 percentage points, respectively. Among all the compared models, the proposed model achieves the highest detection precision and F-value, 98.6% and 98.1%, respectively, which are 6.0 and 9.8 percentage points higher than those of the second-best model, RotNet. Its recall of 97.7% is slightly lower than the 98.4% of the deep support vector data description (DSVDD) model. Overall, the F-value of the proposed model exceeds those of all the compared models by more than 9 percentage points, a clear performance advantage.
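
The abstract contrasts pixel-level metrics (MAE, MSE) with structural similarity. SSIM, as defined by Wang et al., compares the local means, variances, and covariance of two images, SSIM(x, y) = [(2μxμy + C1)(2σxy + C2)] / [(μx² + μy² + C1)(σx² + σy² + C2)], averaged over sliding windows. The NumPy sketch below is not the authors' implementation. It assumes grayscale images scaled to [0, 1], a uniform 7×7 window, and the common constants C1 = (0.01L)² and C2 = (0.03L)², none of which is specified in this section, and it uses 1 − SSIM as a hypothetical `anomaly_score`. It builds two corrupted copies of a random patch with the same MSE: a uniform brightness shift that keeps the texture, and additive noise that destroys it.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def ssim(x, y, win=7, data_range=1.0, k1=0.01, k2=0.03):
    """Mean SSIM of two grayscale images using a uniform win x win window.

    Assumed settings: uniform window, K1 = 0.01, K2 = 0.03 (Wang et al. defaults).
    """
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    # Every win x win patch: shape (H - win + 1, W - win + 1, win, win)
    px = sliding_window_view(x, (win, win))
    py = sliding_window_view(y, (win, win))
    mu_x = px.mean(axis=(-2, -1))
    mu_y = py.mean(axis=(-2, -1))
    var_x = px.var(axis=(-2, -1))
    var_y = py.var(axis=(-2, -1))
    cov_xy = ((px - mu_x[..., None, None]) * (py - mu_y[..., None, None])).mean(axis=(-2, -1))
    # Local SSIM map: luminance term times contrast-structure term
    ssim_map = ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x**2 + mu_y**2 + c1) * (var_x + var_y + c2)
    )
    return float(ssim_map.mean())


def anomaly_score(x, x_hat):
    """Hypothetical score: 1 - SSIM between an image and its reconstruction."""
    return 1.0 - ssim(x, x_hat)


def mse(a, b):
    return float(np.mean((a - b) ** 2))


def mae(a, b):
    return float(np.mean(np.abs(a - b)))


rng = np.random.default_rng(0)
x = rng.uniform(0.25, 0.75, size=(32, 32))  # toy 32x32 textured patch
shifted = x + 0.1                           # brightness shift, structure intact
noise = rng.normal(size=x.shape)
noisy = x + 0.1 * noise / np.sqrt(np.mean(noise**2))  # noise rescaled to RMS 0.1

for name, y in [("shifted", shifted), ("noisy", noisy)]:
    print(f"{name:8s} MSE={mse(x, y):.4f} MAE={mae(x, y):.4f} SSIM={ssim(x, y):.3f}")
```

Both corruptions have the same MSE (0.01) by construction, and MAE even rates the noisy copy as closer to the original, yet SSIM stays close to 1 for the shifted copy and drops well below it for the noisy one. This is the kind of case in which a pixel-level metric cannot separate a harmless appearance change from a structural one.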

Table 1. Network parameter settings

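| Layer | Kernel size | Stride | Padding | Activation function | Output size |
| --- | --- | --- | --- | --- | --- |
| Input layer | | | | | 3×32×32 |
| Encoding layer 1 | 64×4×4 | 2 | 1 | f1(·) | 64×16×16 |
| Encoding layer 2 | 128×4×4 | 2 | 1 | f2(·) | 128×8×8 |
| Encoding layer 3 | 256×4×4 | 2 | 1 | f3(·) | 256×4×4 |
| Encoding layer 4 | 512×4×4 | 2 | 1 | f4(·) | 512×2×2 |
| Encoding layer 5 | 64×4×4 | 2 | 1 | | 64×1×1 |
| Decoding layer 1 | 512×4×4 | 2 | 1 | g1(·) | 512×2×2 |
| Decoding layer 2 | 256×4×4 | 2 | 1 | g2(·) | 256×4×4 |
| Decoding layer 3 | 128×4×4 | 2 | 1 | g3(·) | 128×8×8 |
| Decoding layer 4 | 64×4×4 | 2 | 1 | g4(·) | 64×16×16 |
| Decoding layer 5 | 3×4×4 | 2 | 1 | g5(·) | 3×32×32 |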
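
Table 1 gives a 4×4 kernel with stride 2 and padding 1 for every layer. Assuming, as the halving and doubling of the output sizes suggest, that the encoding layers are ordinary strided convolutions and the decoding layers are transposed convolutions (the table does not name the layer types), the output sizes follow from the standard size formulas. The Python sketch below traces a 3×32×32 input through both halves; it reads the kernel column as (output channels)×(kernel height)×(kernel width).

```python
def conv_out(n, k=4, s=2, p=1):
    """Spatial size after a strided convolution (no dilation)."""
    return (n + 2 * p - k) // s + 1


def deconv_out(n, k=4, s=2, p=1):
    """Spatial size after a transposed convolution (no dilation, no output padding)."""
    return (n - 1) * s - 2 * p + k


enc_channels = [64, 128, 256, 512, 64]  # encoding layers 1-5, from Table 1
dec_channels = [512, 256, 128, 64, 3]   # decoding layers 1-5, from Table 1


def trace_shapes(size=32, in_ch=3):
    """Return (channels, height, width) after the input and after every layer."""
    shapes = [(in_ch, size, size)]
    for c in enc_channels:
        size = conv_out(size)
        shapes.append((c, size, size))
    for c in dec_channels:
        size = deconv_out(size)
        shapes.append((c, size, size))
    return shapes


for shape in trace_shapes():
    print("×".join(map(str, shape)))
```

Each encoding layer halves the spatial size and each decoding layer doubles it, so the 64×1×1 code produced by encoding layer 5 is the bottleneck from which the decoder rebuilds the 3×32×32 image.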

Table 2. Performances of KNN model under different thresholds

| Threshold | Precision/% | Recall/% | F-value/% |
| --- | --- | --- | --- |
| μ | 71.7 | 99.8 | 83.5 |
| μ+σ | 88.5 | 86.0 | 87.2 |
| μ+2σ | 94.0 | 49.8 | 65.1 |
| μ+3σ | 96.3 | 23.7 | 38.1 |

Table 3. Performances of DSVDD model under different thresholds

| Threshold | Precision/% | Recall/% | F-value/% |
| --- | --- | --- | --- |
| μ | 73.9 | 98.4 | 84.4 |
| μ+σ | 95.7 | 73.4 | 83.1 |
| μ+2σ | 99.4 | 43.9 | 60.9 |
| μ+3σ | 99.8 | 24.3 | 39.1 |

Table 4. Performances of RotNet model under different thresholds

| Threshold | Precision/% | Recall/% | F-value/% |
| --- | --- | --- | --- |
| μ | 92.6 | 84.2 | 88.3 |
| μ+σ | 96.5 | 68.3 | 80.0 |
| μ+2σ | 97.5 | 60.9 | 74.9 |
| μ+3σ | 98.0 | 54.9 | 70.4 |

Table 5. Performances of DCAE(MAE) model under different thresholds

| Threshold | Precision/% | Recall/% | F-value/% |
| --- | --- | --- | --- |
| μ | 73.5 | 96.9 | 83.6 |
| μ+σ | 86.7 | 71.3 | 78.2 |
| μ+2σ | 91.0 | 40.5 | 56.1 |
| μ+3σ | 93.9 | 20.6 | 33.8 |

Table 6. Performances of DCAE(MSE) model under different thresholds

| Threshold | Precision/% | Recall/% | F-value/% |
| --- | --- | --- | --- |
| μ | 75.4 | 89.7 | 81.9 |
| μ+σ | 86.3 | 54.7 | 67.0 |
| μ+2σ | 90.0 | 32.1 | 47.3 |
| μ+3σ | 92.3 | 18.8 | 31.3 |

Table 7. Performances of DCAE(SSIM) model under different thresholds

| Threshold | Precision/% | Recall/% | F-value/% |
| --- | --- | --- | --- |
| μ | 75.5 | 100.0 | 86.1 |
| μ+σ | 91.1 | 100.0 | 95.3 |
| μ+2σ | 96.4 | 99.6 | 98.0 |
| μ+3σ | 98.6 | 97.7 | 98.1 |
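
Tables 2 to 7 evaluate each model at four thresholds, μ, μ+σ, μ+2σ, and μ+3σ, in the spirit of the three-sigma rule. The Python sketch below shows one way to produce such a table. It assumes (this section does not say so) that μ and σ are the mean and population standard deviation of anomaly scores computed on normal images, that a test image is flagged as abnormal when its score exceeds the threshold, and that abnormal fasteners form the positive class. The function names and all scores and labels are hypothetical toy values.

```python
import numpy as np


def sigma_thresholds(normal_scores, ks=(0, 1, 2, 3)):
    """Thresholds mu + k*sigma from anomaly scores of normal samples (assumed setup)."""
    mu = float(np.mean(normal_scores))
    sigma = float(np.std(normal_scores))  # population standard deviation
    return {k: mu + k * sigma for k in ks}


def precision_recall_f(scores, labels, threshold):
    """Flag a sample as abnormal when score > threshold; labels: 1 = abnormal, 0 = normal.

    Returns precision, recall, and F-value (harmonic mean), all in percent.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=int)
    pred = scores > threshold
    tp = int(np.sum(pred & (labels == 1)))
    fp = int(np.sum(pred & (labels == 0)))
    fn = int(np.sum(~pred & (labels == 1)))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return 100 * precision, 100 * recall, 100 * f


# Toy data: scores of normal validation images, then a small labelled test set
normal_scores = [0.10, 0.12, 0.14, 0.16, 0.18]
scores = [0.11, 0.15, 0.19, 0.30, 0.25, 0.17]
labels = [0, 0, 0, 1, 1, 1]

thresholds = sigma_thresholds(normal_scores)
results = {k: precision_recall_f(scores, labels, t) for k, t in thresholds.items()}
for k, (p, r, f) in results.items():
    print(f"mu+{k}sigma: precision={p:.1f} recall={r:.1f} F={f:.1f}")
```

On these toy scores, raising the threshold from μ to μ+3σ moves precision from 60% to 100% while recall falls from 100% to about 66.7%, the same trade-off visible for KNN, DSVDD, RotNet, and the MAE/MSE variants. DCAE(SSIM) in Table 7 is the exception: its recall stays at or above 97.7% up to μ+3σ, so its F-value keeps rising with the threshold.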

Table 8. Comparison of test results of different models

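| Model | Precision/% | Recall/% | F-value/% |
| --- | --- | --- | --- |
| KNN | 88.5 | 86.0 | 87.2 |
| DSVDD | 73.9 | 98.4 | 84.4 |
| RotNet | 92.6 | 84.2 | 88.3 |
| DCAE(MAE) | 73.5 | 96.9 | 83.6 |
| DCAE(MSE) | 75.4 | 89.7 | 81.9 |
| DCAE(SSIM) | 98.6 | 97.7 | 98.1 |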
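
The reported F-values are consistent with the harmonic mean of precision and recall, F = 2PR/(P + R). The Python check below recomputes F from the precision and recall in Table 8 and derives the margins of DCAE(SSIM) over the other models from the reported F-values. Because the published precision and recall are already rounded to one decimal, a recomputed F-value can differ from the reported one by up to about 0.1 point (RotNet recomputes to 88.2 against the reported 88.3).

```python
# Precision and recall (%) as listed in Table 8
table8 = {
    "KNN": (88.5, 86.0),
    "DSVDD": (73.9, 98.4),
    "RotNet": (92.6, 84.2),
    "DCAE(MAE)": (73.5, 96.9),
    "DCAE(MSE)": (75.4, 89.7),
    "DCAE(SSIM)": (98.6, 97.7),
}
# F-values (%) as reported in Table 8
reported_f = {
    "KNN": 87.2, "DSVDD": 84.4, "RotNet": 88.3,
    "DCAE(MAE)": 83.6, "DCAE(MSE)": 81.9, "DCAE(SSIM)": 98.1,
}


def f_value(p, r):
    """F-value: harmonic mean of precision p and recall r."""
    return 2 * p * r / (p + r)


for model, (p, r) in table8.items():
    print(f"{model:11s} recomputed F={f_value(p, r):.2f} reported F={reported_f[model]}")

# F-value margins (percentage points) of DCAE(SSIM) over each other model
best = reported_f["DCAE(SSIM)"]
gains = {m: round(best - v, 1) for m, v in reported_f.items() if m != "DCAE(SSIM)"}
precision_gain_vs_rotnet = round(table8["DCAE(SSIM)"][0] - table8["RotNet"][0], 1)
print(gains, precision_gain_vs_rotnet)
```

The margins reproduce the figures quoted in the abstract: 14.5 and 16.2 points over the MAE and MSE variants, 6.0 points of precision and 9.8 points of F-value over RotNet, and more than 9 points of F-value over every compared model. The 98.1% F-value of DCAE(SSIM) corresponds to the 97.7% recall listed in the table.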

[1] ZHAN Zhi-kun, SUN Hao, YU Xiao-dong, et al. Wireless rail fastener looseness detection based on MEMS accelerometer and vibration entropy[J]. IEEE Sensors Journal, 2020, 20(6): 3226-3234. doi: 10.1109/JSEN.2019.2955378 [2] YUAN Zhan-dong, ZHU Sheng-yang, YUAN Xuan-cheng, et al. Vibration-based damage detection of rail fastener clip using convolutional neural network: experiment and simulation[J]. Engineering Failure Analysis, 2021, 119: 104906. doi: 10.1016/j.engfailanal.2020.104906 [3] CHANDRAN P, RANTATALO M, ODELIUS J, et al. Train-based differential eddy current sensor system for rail fastener detection[J]. Measurement Science and Technology, 2019, 30(12): 125105. doi: 10.1088/1361-6501/ab2b24 [4] WEI Xiu-kun, YANG Zi-ming, LIU Yu-xin, et al. Railway track fastener defect detection based on image processing and deep learning techniques: a comparative study[J]. Engineering Applications of Artificial Intelligence, 2019, 80: 66-81. doi: 10.1016/j.engappai.2019.01.008 [5] JING Guo-qing, QIN Xuan-yang, WANG Hao-yu, et al. Developments, challenges, and perspectives of railway inspection robots[J]. Automation in Construction, 2022, 138: 104242. doi: 10.1016/j.autcon.2022.104242 [6] 张辉, 宋雅男, 王耀南, 等. 钢轨缺陷无损检测与评估技术综述[J]. 仪器仪表学报, 2019, 40(2): 11-25. https://www.cnki.com.cn/Article/CJFDTOTAL-YQXB201902002.htmZHANG Hui, SONG Ya-nan, WANG Yao-nan, et al. Review of rail defect non-destructive testing and evaluation[J]. Chinese Journal of Scientific Instrument, 2019, 40(2): 11-25. (in Chinese) https://www.cnki.com.cn/Article/CJFDTOTAL-YQXB201902002.htm [7] 王平, 盛宏威, 冀凯伦, 等. 高速载运设施的无损检测技术应用和发展趋势[J]. 数据采集与处理, 2020, 35(2): 195-209. https://www.cnki.com.cn/Article/CJFDTOTAL-SJCJ202002002.htmWANG Ping, SHENG Hong-wei, JI Kai-lun, et al. Application and development trend of non-destructive testing technology for high-speed transportation facilities[J]. Journal of Data Acquisition and Processing, 2020, 35(2): 195-209. 
(in Chinese) https://www.cnki.com.cn/Article/CJFDTOTAL-SJCJ202002002.htm [8] 刘甲甲, 熊鹰, 李柏林, 等. 基于计算机视觉的轨道扣件缺陷自动检测算法研究[J]. 铁道学报, 2016, 38(8): 73-80. doi: 10.3969/j.issn.1001-8360.2016.08.011LIU Jia-jia, XIONG Ying, LI Bai-lin, et al. Research on automatic inspection algorithm for railway fastener defects based on computer vision[J]. Journal of the China Railway Society, 2016, 38(8): 73-80. (in Chinese) doi: 10.3969/j.issn.1001-8360.2016.08.011 [9] FAN Hong, COSMAN P C, HOU Yun, et al. High-speed railway fastener detection based on a line local binary pattern[J]. IEEE Signal Processing Letters, 2018, 25(6): 788-792. doi: 10.1109/LSP.2018.2825947 [10] GIBERT X, PATEL V M, CHELLAPPA R. Robust fastener detection for autonomous visual railway track inspection[C]// IEEE. 2015 IEEE Winter Conference on Applications of Computer Vision. New York: IEEE, 2015: 694-701. [11] 代先星, 丁世海, 阳恩慧, 等. 铁路扣件弹条伤损自动检测系统研发与验证[J]. 铁道科学与工程学报, 2018, 15(10): 2478-2486. doi: 10.19713/j.cnki.43-1423/u.2018.10.004DAI Xian-xing, DING Shi-hai, YANG En-hui, et al. Development and verification of automatic inspection system for high-speed railway fastener[J]. Journal of Railway Science and Engineering, 2018, 15(10): 2478-2486. (in Chinese) doi: 10.19713/j.cnki.43-1423/u.2018.10.004 [12] FENG Hao, JIANG Zhi-guo, XIE Feng-ying, et al. Automatic f astener classification and defect detection in vision-based railway inspection systems[J]. IEEE Transactions on Instrumentation and Measurement, 2014, 63(4): 877-888. doi: 10.1109/TIM.2013.2283741 [13] 戴鹏, 王胜春, 杜馨瑜, 等. 基于半监督深度学习的无砟轨道扣件缺陷图像识别方法[J]. 中国铁道科学, 2018, 39(4): 43-49. doi: 10.3969/j.issn.1001-4632.2018.04.07DAI Peng, WANG Sheng-chun, DU Xin-yu, et al. Image recognition method for the fastener defect of ballastless track based on semi-supervised deep learning[J]. China Railway Science, 2018, 39(4): 43-49. (in Chinese) doi: 10.3969/j.issn.1001-4632.2018.04.07 [14] DONG Bang-yi, LI Qing-yong, WANG Jian-zhu, et al. 
An end-to-end abnormal fastener detection method based on data synthesis[C]//IEEE. 2019 IEEE 31st International Conference on Tools with Artificial Intelligence. New York: IEEE, 2019: 149-156. [15] MA Ning-ning, ZHANG Xiang-yu, ZHENG Hai-tao, et al. ShuffleNet v2: Practical guidelines for efficient CNN architecture design[C]//Springer. 15th European Conference on Computer Vision. Berlin: Springer, 2018: 116-131. [16] LIU Jun-bo, HUANG Ya-ping, ZOU Qi, et al. Learning visual similarity for inspecting defective railway fasteners[J]. IEEE Sensors Journal, 2019, 19(16): 6844-6857. doi: 10.1109/JSEN.2019.2911015 [17] 韦若禹, 李舒婷, 吴松荣, 等. 基于改进YOLO V3算法的轨道扣件缺陷检测[J]. 铁道标准设计, 2020, 64(12): 30-36. https://www.cnki.com.cn/Article/CJFDTOTAL-TDBS202012008.htmWEI Ruo-yu, LI Shu-ting, WU Song-rong, et al. Defect detection of track fastener based on improved YOLO V3 algorithm[J]. Railway Standard Design, 2020, 64(12): 30-36. (in Chinese) https://www.cnki.com.cn/Article/CJFDTOTAL-TDBS202012008.htm [18] REDMON J, FARHADI A. YOLO v3: an incremental improvement[J]. arXiv, 2018, https://doi.org/10.48550/arXiv.1804.02767 [19] HSIEH C C, LIN Y W, TSAI L H, et al. Offline deep-learning-based defective track fastener detection and inspection system[J]. Sensors and Materials, 2020, 32(10): 3429-3442. doi: 10.18494/SAM.2020.2921 [20] ZHAN You, DAI Xian-xing, YANG En-hui, et al. Convolutional neural network for detecting railway fastener defects using a developed 3D laser system[J]. International Journal of Rail Transportation, 2021, 9(5): 424-444. doi: 10.1080/23248378.2020.1825128 [21] CUI Hao, LI Jian, HU Qing-wu, et al. Real-time inspection system for ballast railway fasteners based on point cloud deep learning[J]. IEEE Access, 2020, 8: 61604-61614. doi: 10.1109/ACCESS.2019.2961686 [22] RUMELHART D E, HINTON G E, WILLIAMS R J. Learning representations by back-propagating errors[J]. Nature, 1986, 323(6088): 533-536. [23] MAAS A L, HANNUN A Y, NG A Y. 
Rectifier nonlinearities improve neural network acoustic models[C]//ICML. 2013 International Conference on Machine Learning Workshop on Deep Learning for Audio, Speech and Language Processing. New York: ICML, 2013: 1-6. [24] KALMAN B L, KWASNY S C. Why tanh: choosing a sigmoidal function[C]//IEEE. 1992 International Joint Conference on Neural Networks. New York: IEEE, 1992: 578-581. [25] WANG Zhou, BOVIK A C, SHEIKH H R, et al. Image quality assessment: from error visibility to structural similarity[J]. IEEE Transactions on Image Processing, 2004, 13(4): 600-612. [26] BERGMANN P, LOWE S, FAUSER M, et al. Improving unsupervised defect segmentation by applying structural similarity to autoencoders[C]//Springer. 2019 International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications. Berlin: Springer, 2019: 372-380. [27] KINGMA D P, BA J L. Adam: a method for stochastic optimization[C]//ICLR. 2015 International Conference on Learning Representations. La Jolla: ICLR, 2015: 1-15. [28] IOFFE S, SZEGEDY C. Batch normalization: accelerating deep network training by reducing internal covariate shift[C]// ICML. 2015 International Conference on Machine Learning. New York: ICML, 2015: 448-456. [29] COVER T, HART P. Nearest neighbor pattern classification[J]. IEEE Transactions on Information Theory, 1967, 13(1): 21-27. [30] RUFF L, VANDERMEULEN R, GOERNITZ N, et al. Deep one-class classification[C]//ICML. 2018 International Conference on Machine Learning. New York: ICML, 2018: 4393-4402. [31] KOMODAKIS N, GIDARIS S. Unsupervised representation learning by predicting image rotations[C]//ICLR. 2018 International Conference on Learning Representations. La Jolla: ICLR, 2018: 1-16. [32] HE Kai-ming, ZHANG Xiang-yu, REN Shao-qing, et al. Deep residual learning for image recognition[C]//IEEE. 2016 Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition. New York: IEEE, 2016: 770-778. [33] PUKELSHEIM F. 
The three sigma rule[J]. The American Statistician, 1994, 48(2): 88-91. -

下载:

下载: