Classification method of running environment features for unmanned vehicle


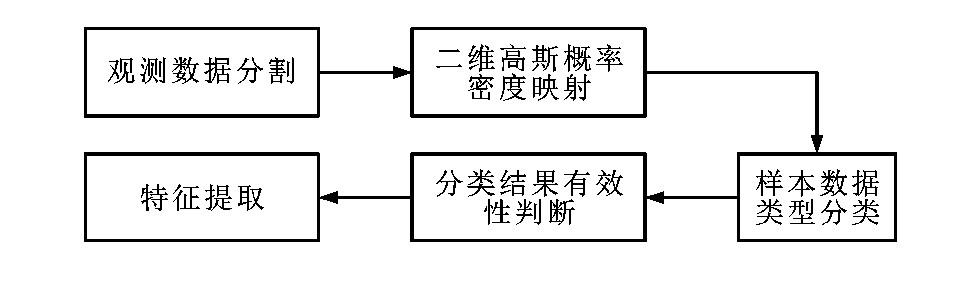

Abstract: To improve the ability of vehicle-mounted 2D LiDAR to classify obstacles in urban environments, the accuracy of environmental map building, and the safety and accuracy of autonomous behavior decision-making for unmanned vehicles, a machine-learning-based method for classifying environmental features was proposed. Each 2D LiDAR observation frame was divided into independent data segments, each containing one environmental obstacle. In the 2D Gaussian probability density space of each data segment, the axis lengths of the contour ellipse of the probability density, the log-likelihood value, and the maximum probability density were taken as the sample data elements of an artificial neural network, which then classified the data segments. The validity of each classification was judged from the weights of the artificial neural network's output values, only valid environmental features were retained, and features were extracted from the classified observation data. The computational results show that, in the same test scenario, under the relaxed validity condition (classification stability interval [0.55, 1], classification transition interval [0.45, 0.55), classification invalid interval [0, 0.45)), 98 environmental features were identified; the maximum standard deviation among the classification and extraction results for repeated observations of the same environmental feature was 30.7 mm, and the average standard deviation over all features was 5.1 mm. Under the strict validity condition (classification stability interval [0.65, 1], classification transition interval [0.35, 0.65), classification invalid interval [0, 0.35)), 93 environmental features were identified; the maximum standard deviation for repeated observations of the same environmental feature was 22.0 mm, and the average standard deviation over all features was 4.2 mm. The proposed classification method therefore has strong noise tolerance and high classification accuracy.
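The per-segment descriptors named in the abstract (contour ellipse axis lengths, log-likelihood, maximum probability density) can be illustrated with a minimal sketch. It assumes that each data segment is a list of (x, y) points, that the Gaussian is fitted by maximum likelihood, and that the contour ellipse is taken at `n_sigma` standard deviations; the function name, the dictionary keys, and these choices are hypothetical and are not taken from the paper. The artificial neural network that consumes the descriptors is not shown.

```python
import math


def gaussian_segment_features(points, n_sigma=1.0):
    """Fit a 2D Gaussian to one data segment and return four descriptors.

    Assumptions (not from the paper): maximum-likelihood fit, contour
    ellipse semi-axes measured at n_sigma standard deviations.
    """
    n = len(points)
    if n < 3:
        raise ValueError("a segment needs at least 3 points")

    # Maximum-likelihood mean and covariance (divide by n, not n - 1).
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points) / n
    syy = sum((y - mean_y) ** 2 for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points) / n

    det = sxx * syy - sxy * sxy
    if det <= 0.0:
        raise ValueError("degenerate segment: covariance is singular")

    # Eigenvalues of the symmetric 2x2 covariance in closed form.
    half_trace = 0.5 * (sxx + syy)
    lam_major = half_trace + math.sqrt(max(half_trace ** 2 - det, 0.0))
    lam_minor = det / lam_major  # product of the eigenvalues equals det

    # Peak of the fitted density, reached at the mean.
    max_density = 1.0 / (2.0 * math.pi * math.sqrt(det))

    # Log-likelihood of the segment's own points under the fitted Gaussian.
    log_likelihood = 0.0
    for x, y in points:
        dx, dy = x - mean_x, y - mean_y
        mahalanobis_sq = (syy * dx * dx - 2.0 * sxy * dx * dy + sxx * dy * dy) / det
        log_likelihood += math.log(max_density) - 0.5 * mahalanobis_sq

    return {
        "major_axis": n_sigma * math.sqrt(lam_major),
        "minor_axis": n_sigma * math.sqrt(lam_minor),
        "log_likelihood": log_likelihood,
        "max_density": max_density,
    }
```

Under these assumptions, an elongated segment such as a wall yields a major axis much longer than the minor axis, while a compact segment such as a pole yields two similar axes, which is the kind of contrast a classifier can exploit.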


Table 1. Classification results

Table 2. Classification intervals under the relaxed condition

Table 3. Classification intervals under the strict condition

Table 4. Classification intervals of mixed samples
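The relaxed and strict judging conditions quoted in the abstract amount to a three-way threshold on a weight in [0, 1]. The sketch below encodes only those published interval bounds; the names `ValidityIntervals`, `RELAXED`, `STRICT`, and `judge_validity` are hypothetical, and how the weight is derived from the network's output values is not specified in this excerpt, so it is treated here as a given input.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ValidityIntervals:
    """Lower bounds of the stability and transition intervals."""
    stable_min: float      # stability interval is [stable_min, 1]
    transition_min: float  # transition interval is [transition_min, stable_min)


# Interval bounds as stated in the abstract.
RELAXED = ValidityIntervals(stable_min=0.55, transition_min=0.45)
STRICT = ValidityIntervals(stable_min=0.65, transition_min=0.35)


def judge_validity(weight, intervals):
    """Map an output weight in [0, 1] to 'stable', 'transition', or 'invalid'."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    if weight >= intervals.stable_min:
        return "stable"
    if weight >= intervals.transition_min:
        return "transition"
    return "invalid"


if __name__ == "__main__":
    for w in (0.40, 0.60, 0.70):
        print(w, judge_validity(w, RELAXED), judge_validity(w, STRICT))
```

The same weight can fall in different intervals under the two conditions: a weight of 0.60, for instance, lies in the stability interval under the relaxed condition but in the transition interval under the strict one.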

