Hand gesture recognition method in driver's phone-call behavior based on decision fusion of image features


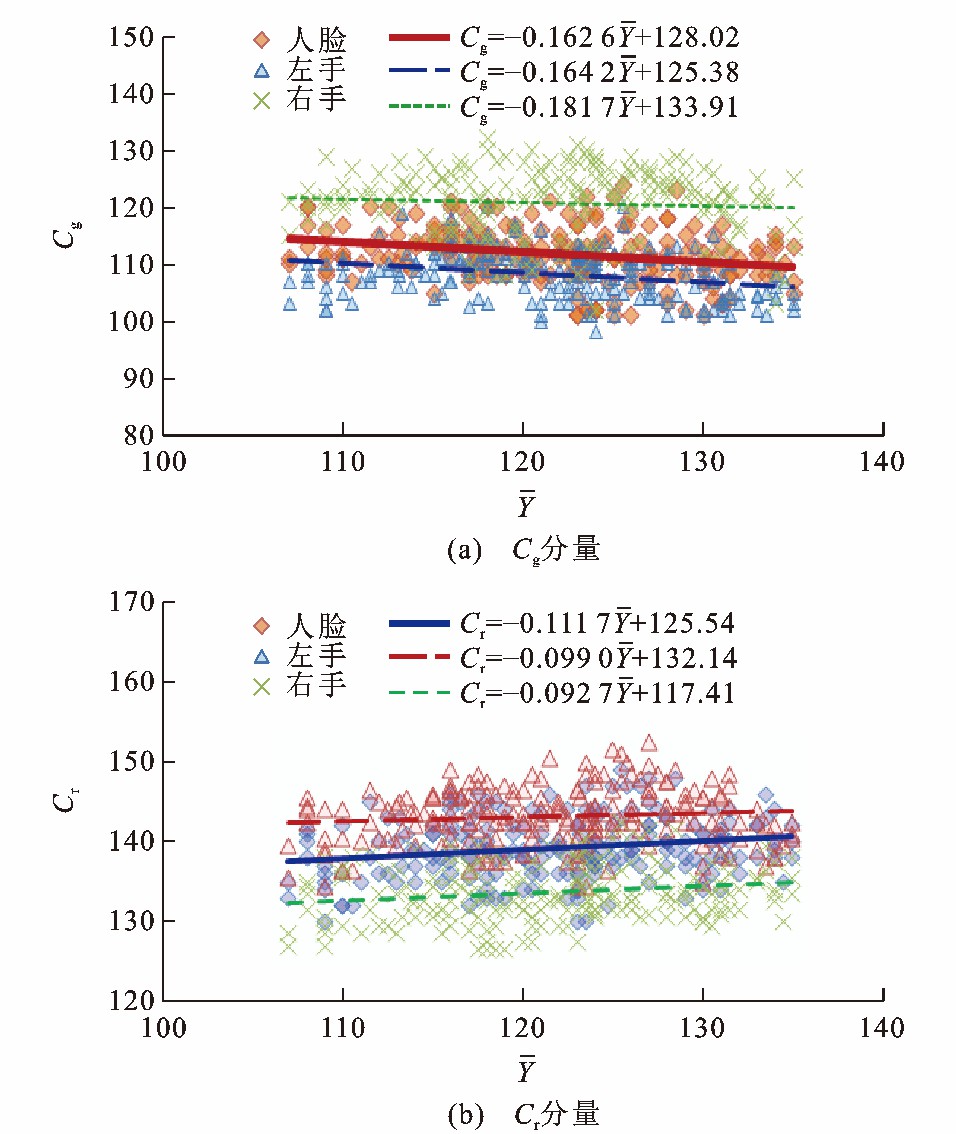

Abstract: To detect drivers' phone-call behavior robustly in natural driving environments, a method for recognizing the phone-call hand gesture was proposed. The Adaboost algorithm was used to detect the driver's face region. In the YCgCr color space, the luminance and chroma components of the facial skin were each sampled on a sparse grid, and a Gaussian distribution model of skin color was built from the samples. To handle the non-uniform illumination in the cab, a drift compensation algorithm for the skin color components was proposed, and an online skin color model that adapts to illumination changes was established so that the skin regions of the left and right hands could be segmented accurately. A 2376-dimensional histogram of oriented gradients (HOG) feature vector was extracted from the hand skin region and reduced to 400 dimensions by principal component analysis (PCA). In parallel, pseudo-Zernike moment (PZMs) features of the hand skin region were extracted, and the 8 PZMs features with the largest weights were selected by the Relief algorithm. A support vector machine classification decision for the phone-call hand gesture was then built by fusing the PCA-HOG and Relief-PZMs features. Experimental results show that the recognition rate based on the PCA-HOG features is 93.1%; these features are robust to illumination changes but are easily disturbed by hand and head rotation. The recognition rate based on the Relief-PZMs features is 91.9%; these features tolerate changes in head and hand pose well but are less robust to illumination. The recognition rate of the proposed multi-feature fusion method, which combines the PCA-HOG and Relief-PZMs features, reaches 94.5%, and the method adapts well to illumination fluctuation, hand and head rotation, and other interference.
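The skin color modelling step in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the RGB-to-YCgCr coefficients are the commonly quoted ones and should be checked against the paper, the model here uses only the two chroma components (Cg, Cr), and the names `fit_skin_model`, `skin_mask`, the grid `step`, and the Mahalanobis threshold `max_dist` are all assumptions made for illustration. The drift compensation for illumination is not described in enough detail here to sketch, so it is left out.

```python
import numpy as np

# RGB -> YCgCr matrix and offset (assumed coefficients; verify against the paper).
_M = np.array([
    [65.481, 128.553, 24.966],    # Y
    [-81.085, 112.000, -30.915],  # Cg
    [112.000, -93.786, -18.214],  # Cr
])
_OFFSET = np.array([16.0, 128.0, 128.0])


def rgb_to_ycgcr(rgb):
    """Convert an (..., 3) RGB array with values in 0..255 to float YCgCr."""
    rgb01 = np.asarray(rgb, dtype=np.float64) / 255.0
    return rgb01 @ _M.T + _OFFSET


def fit_skin_model(face_rgb, step=4):
    """Fit a 2-D Gaussian to (Cg, Cr) sampled on a sparse grid over a face crop."""
    samples = rgb_to_ycgcr(face_rgb[::step, ::step]).reshape(-1, 3)[:, 1:]
    mean = samples.mean(axis=0)
    # A small ridge keeps the covariance invertible for near-uniform patches.
    cov = np.cov(samples, rowvar=False) + 1e-6 * np.eye(2)
    return mean, np.linalg.inv(cov)


def skin_mask(image_rgb, mean, inv_cov, max_dist=3.0):
    """Mark pixels whose chroma lies within max_dist (Mahalanobis) of the model."""
    diff = rgb_to_ycgcr(image_rgb)[..., 1:] - mean
    d2 = np.einsum("...i,ij,...j->...", diff, inv_cov, diff)
    return d2 <= max_dist ** 2
```

In use, `fit_skin_model` would be called on the face crop returned by the face detector, and `skin_mask` on the full frame to obtain candidate hand regions; refitting the model per frame is one simple way to follow illumination changes, though it is not the compensation scheme the paper proposes.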


Table 1. Distribution of feature weights

| Feature weight | Relief-PZMs-SVM | PCA-HOG-SVM |
| --- | --- | --- |
| Positive weight | 396 | 419 |
| Normalized positive weight | 0.486 | 0.514 |
| Negative weight | 267 | 308 |
| Normalized negative weight | 0.464 | 0.536 |
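The normalized rows of Table 1 follow from dividing each raw weight by the sum of the two classifiers' weights for the same class. The short sketch below reproduces that arithmetic; the function name `normalize_weights` is an assumption for illustration, and how the normalized weights enter the fused decision is not specified in this excerpt, so that step is not shown.

```python
def normalize_weights(raw):
    """Scale raw weights so that they sum to 1."""
    total = sum(raw.values())
    return {name: w / total for name, w in raw.items()}


# Raw weights taken from Table 1.
positive = normalize_weights({"Relief-PZMs-SVM": 396, "PCA-HOG-SVM": 419})
negative = normalize_weights({"Relief-PZMs-SVM": 267, "PCA-HOG-SVM": 308})

for label, weights in (("positive", positive), ("negative", negative)):
    print(label, {name: round(w, 3) for name, w in weights.items()})
# positive {'Relief-PZMs-SVM': 0.486, 'PCA-HOG-SVM': 0.514}
# negative {'Relief-PZMs-SVM': 0.464, 'PCA-HOG-SVM': 0.536}
```

For both classes the PCA-HOG classifier receives slightly more weight than the Relief-PZMs classifier.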

Table 2. Performance comparison of algorithms

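| Algorithm | Correct detection rate/% | False detection rate/% | Feature extraction time/ms | Total time/ms |
| --- | --- | --- | --- | --- |
| Weighted Hu moments | 82.2 | 12.4 | 63.9 | 112.4 |
| PCA-SIFT | 84.0 | 10.7 | 79.0 | 139.2 |
| HOG | 91.5 | 5.1 | 821.0 | 905.3 |
| PCA-HOG | 93.1 | 3.7 | 98.5 | 152.0 |
| Zernike moments | 90.5 | 5.5 | 152.1 | 207.7 |
| PZMs | 91.2 | 5.4 | 158.6 | 225.0 |
| Relief-PZMs | 91.9 | 3.8 | 62.3 | 119.8 |
| Proposed method | 94.5 | 3.0 | 124.4 | 179.6 |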

