In-vehicle image technology for identifying faults of pantograph


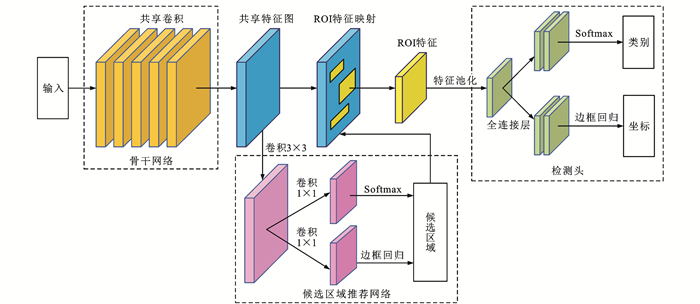

Abstract: To address the threat that in-service pantograph faults pose to train operation safety, an in-vehicle image recognition technology for pantograph faults was proposed. It detects, in real time, dropping, deformation and destruction of the pantograph, abnormal wear and notches of the carbon contact strip, and deformation and loss of the pantograph horns. Based on the faster region-convolutional neural network (Faster R-CNN) target detection framework, a target detection model for locating the pantograph bow in images was designed: a residual network replaced the original convolutional network, and the region proposal network was built on a feature pyramid multi-scale prediction structure, so that the pantograph bow could be located and its status detected accurately and quickly. Based on the mask region-convolutional neural network (Mask R-CNN) instance segmentation framework, a pantograph bow image segmentation model was designed, and the network structure and feature map size of the detection head were redesigned to suit the slender, curved shape of the pantograph, so that the pantograph bow image could be segmented accurately and quickly. To identify and locate faults more quickly in the segmented binary image, a rapid template matching strategy for faults of the pantograph horns and carbon contact strip was formulated from the structural dimensions of the pantograph and the position coordinates output by the image segmentation model, and a detailed fault detection algorithm and program were developed on this basis. Results on the corresponding datasets show that the target detection model for locating the pantograph bow achieves an average detection accuracy of 0.944 at an average detection time of 0.029 s per frame; the pantograph bow image segmentation model achieves an average segmentation accuracy of 0.967 at 0.031 s per frame; template matching achieves a detection accuracy of 0.985 at 0.005 s per frame; and the complete fault detection algorithm achieves an average detection accuracy of 0.966 at 0.051 s per frame. The proposed detection algorithm therefore offers high reliability and real-time performance.
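To put the real-time claim in more familiar units, the reported per-frame times can be converted to throughput. The following is a minimal Python sketch; only the four times come from the abstract, while the dictionary keys and the function name are illustrative choices.

```python
# Average per-frame times in seconds, as reported in the abstract.
stage_times_s = {
    "bow localization model": 0.029,
    "bow segmentation model": 0.031,
    "template matching": 0.005,
    "complete fault detection algorithm": 0.051,
}


def frames_per_second(seconds_per_frame: float) -> float:
    """Convert an average per-frame time into a throughput in frames/s."""
    if seconds_per_frame <= 0:
        raise ValueError("per-frame time must be positive")
    return 1.0 / seconds_per_frame


throughput = {name: frames_per_second(t) for name, t in stage_times_s.items()}

for name, fps in throughput.items():
    print(f"{name}: {fps:.1f} frames/s")
```

At 0.051 s per frame the complete algorithm handles roughly 19.6 frames per second. Whether that is sufficient depends on the camera frame rate, which this section does not state.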


Key words: vehicle engineering; pantograph; fault detection; deep learning; target detection; instance segmentation; template matching


Table 1. Performance of models on small target test datasets

| Model | IOU(0.75) | IOU(0.85) | IOU(0.95) | IOU(0.50~0.95) |
| --- | --- | --- | --- | --- |
| Faster R-CNN | 1.000 | 1.000 | 0.517 | 0.934 |
| YOLO-V3 | 1.000 | 0.958 | 0.375 | 0.907 |
| ResNet50-FPN | 1.000 | 1.000 | 0.566 | 0.939 |
| ResNet101-FPN | 1.000 | 1.000 | 0.592 | 0.942 |

Table 2. Performance of models on medium target test datasets

| Model | IOU(0.75) | IOU(0.85) | IOU(0.95) | IOU(0.50~0.95) |
| --- | --- | --- | --- | --- |
| Faster R-CNN | 1.000 | 1.000 | 0.583 | 0.936 |
| YOLO-V3 | 1.000 | 0.950 | 0.483 | 0.929 |
| ResNet50-FPN | 1.000 | 1.000 | 0.633 | 0.953 |
| ResNet101-FPN | 1.000 | 1.000 | 0.617 | 0.944 |

Table 3. Performance of models on large target test datasets

| Model | IOU(0.75) | IOU(0.85) | IOU(0.95) | IOU(0.50~0.95) |
| --- | --- | --- | --- | --- |
| Faster R-CNN | 1.000 | 1.000 | 0.559 | 0.931 |
| YOLO-V3 | 1.000 | 1.000 | 0.450 | 0.912 |
| ResNet50-FPN | 1.000 | 1.000 | 0.642 | 0.945 |
| ResNet101-FPN | 1.000 | 1.000 | 0.675 | 0.948 |

Table 4. Performance of models on total test dataset

| Model | IOU(0.85) | IOU(0.95) | IOU(0.50~0.95) | Average detection time per frame/s |
| --- | --- | --- | --- | --- |
| Faster R-CNN | 1.000 | 0.547 | 0.933 | 0.210 |
| YOLO-V3 | 0.973 | 0.427 | 0.913 | 0.018 |
| ResNet50-FPN | 1.000 | 0.610 | 0.944 | 0.029 |
| ResNet101-FPN | 1.000 | 0.630 | 0.945 | 0.034 |
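The detection tables report accuracy at several intersection-over-union (IoU) thresholds. As a reminder of what those thresholds mean, here is a minimal Python sketch of box IoU and a thresholded correctness check. The `(x_min, y_min, x_max, y_max)` box layout, the function names, and the example coordinates are assumptions for illustration; the section does not describe the evaluation code actually used.

```python
from typing import Tuple

# Assumed box layout: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_correct_detection(pred: Box, truth: Box, threshold: float) -> bool:
    """A prediction counts as correct when its IoU reaches the threshold."""
    return box_iou(pred, truth) >= threshold


# Hypothetical wide, flat box; the prediction is shifted 5 px sideways.
truth_box = (0.0, 0.0, 100.0, 40.0)
pred_box = (5.0, 0.0, 105.0, 40.0)

print(f"IoU = {box_iou(pred_box, truth_box):.3f}")
print("correct at 0.85:", is_correct_detection(pred_box, truth_box, 0.85))
print("correct at 0.95:", is_correct_detection(pred_box, truth_box, 0.95))
```

In this made-up case a 5-pixel shift already drops the IoU to about 0.905, so the prediction passes the 0.85 threshold but fails at 0.95, which is consistent with the sharp drop all models show in the IOU(0.95) columns.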

Table 5. Location accuracies and detection speeds of segmentation model

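| Dataset | IOU(0.75) | IOU(0.85) | IOU(0.95) | IOU(0.50~0.95) | Detection time per frame/s |
| --- | --- | --- | --- | --- | --- |
| Fault images | 1.000 | 1.000 | 0.352 | 0.936 | 0.031 |
| Normal images | 1.000 | 1.000 | 0.381 | 0.947 | 0.031 |
| Combined | 1.000 | 1.000 | 0.359 | 0.948 | 0.031 |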

Table 6. Mask segmentation accuracies of segmentation model

| Dataset | IOU(0.75) | IOU(0.85) | IOU(0.95) | IOU(0.50~0.95) |
| --- | --- | --- | --- | --- |
| Fault images | 1.000 | 1.000 | 0.644 | 0.964 |
| Normal images | 1.000 | 1.000 | 0.667 | 0.968 |
| Combined | 1.000 | 1.000 | 0.661 | 0.967 |
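For segmentation, IoU is computed over mask pixels rather than box areas. The sketch below shows one common way to do this for binary masks stored as nested lists. The mask representation, the function name, and the toy masks are assumptions for illustration, not the evaluation code behind the table.

```python
from typing import Sequence

# Assumed representation: rows of 0/1 pixel values.
Mask = Sequence[Sequence[int]]


def mask_iou(pred: Mask, truth: Mask) -> float:
    """Pixel-wise intersection over union of two binary masks."""
    if len(pred) != len(truth) or any(
        len(p_row) != len(t_row) for p_row, t_row in zip(pred, truth)
    ):
        raise ValueError("masks must have the same shape")
    inter = 0
    union = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            inter += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    # Convention chosen here: two empty masks are treated as a perfect match.
    return inter / union if union else 1.0


# Toy masks: the prediction misses one pixel at the right end of a thin strip.
truth_mask = [
    [0, 1, 1, 1, 0],
    [1, 1, 1, 1, 1],
    [0, 0, 0, 0, 0],
]
pred_mask = [
    [0, 1, 1, 1, 0],
    [1, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]

print(f"mask IoU = {mask_iou(pred_mask, truth_mask):.3f}")
```

For thin, elongated shapes a single missed pixel removes a noticeable fraction of the object, which is one reason high thresholds such as 0.95 are demanding for slender targets like a pantograph bow.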

Table 7. Test results of standard correlation coefficient matching

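| Item | Left horn fault | Right horn fault | Carbon contact strip fault | Normal image |
| --- | --- | --- | --- | --- |
| Number of samples | 40 | 40 | 40 | 40 |
| Number recognized | 40 | 39 | 40 | 38 |
| Number misjudged | 0 | 1 | 0 | 2 |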
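The table names "standard correlation coefficient matching" without defining it. The name suggests the normalized correlation coefficient (the measure OpenCV exposes as `TM_CCOEFF_NORMED`); that mapping is an assumption, since the section does not say which implementation was used. A minimal pure-Python sketch of that measure, with an exhaustive sliding-window search, might look as follows. The function names and the toy image are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Image = Sequence[Sequence[float]]


def correlation_coefficient(window: List[float], template: List[float]) -> float:
    """Normalized correlation coefficient of two equally sized pixel lists."""
    mean_w = sum(window) / len(window)
    mean_t = sum(template) / len(template)
    num = sum((w - mean_w) * (t - mean_t) for w, t in zip(window, template))
    den = math.sqrt(
        sum((w - mean_w) ** 2 for w in window)
        * sum((t - mean_t) ** 2 for t in template)
    )
    # A constant window or template has zero variance; report no correlation.
    return num / den if den > 0 else 0.0


def match_template(image: Image, template: Image) -> Tuple[float, Tuple[int, int]]:
    """Slide the template over the image; return (best score, (row, col))."""
    img_h, img_w = len(image), len(image[0])
    tpl_h, tpl_w = len(template), len(template[0])
    flat_template = [v for row in template for v in row]
    best_score, best_pos = -1.0, (0, 0)
    for y in range(img_h - tpl_h + 1):
        for x in range(img_w - tpl_w + 1):
            window = [image[y + dy][x + dx]
                      for dy in range(tpl_h) for dx in range(tpl_w)]
            score = correlation_coefficient(window, flat_template)
            if score > best_score:
                best_score, best_pos = score, (y, x)
    return best_score, best_pos


# Toy binary image containing one corner-shaped pattern.
binary_image = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 1, 0, 0, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 0, 0, 0, 0, 0],
]
corner_template = [
    [1, 0],
    [1, 1],
]

score, position = match_template(binary_image, corner_template)
print(f"best score {score:.3f} at row/col {position}")
```

In a fault detector, a low best score in the region where a horn or strip is expected would indicate a mismatch with the healthy template. The decision threshold and the search regions used by the authors are not given in this section.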

Table 8. Test results of template matching

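| Dataset | Number of samples | Number recognized | Number of false detections | Detection accuracy | Detection time per frame/s |
| --- | --- | --- | --- | --- | --- |
| Fault data | 400 | 395 | 5 | 0.9875 | 0.005 |
| Normal data | 400 | 393 | 7 | 0.9825 | 0.005 |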

Table 9. Detection results of pantograph fault video data

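| Fault type | Data volume/frames | Detected/frames | Correctly detected faults/frames | Precision | Recall |
| --- | --- | --- | --- | --- | --- |
| Overall fault | 101 | 101 | 101 | 1.000 | 1.0 |
| Horn fault | 150 | 150 | 150 | 1.000 | 1.0 |
| Carbon contact strip fault | 150 | 164 | 150 | 0.915 | 1.0 |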

Table 10. Comprehensive evaluation results of algorithm performance

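| Video size/frames | Fault data/frames | Detected/frames | Correctly detected/frames | Precision | Detection rate | Average detection time per frame/s |
| --- | --- | --- | --- | --- | --- | --- |
| 8925 | 401 | 415 | 401 | 0.966 | 1.0 | 0.051 |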
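The precision and recall (detection rate) values in the two video tables can be reproduced from the frame counts. The sketch below assumes the usual definitions, precision = correctly detected / detected and recall = correctly detected / actual faults. These definitions are inferred because they match the reported numbers; the section itself does not spell them out. The function name and row labels are illustrative.

```python
from typing import Dict, Tuple


def precision_recall(fault_frames: int, detected: int, correct: int) -> Tuple[float, float]:
    """Return (precision, recall) from frame counts."""
    precision = correct / detected if detected else 0.0
    recall = correct / fault_frames if fault_frames else 0.0
    return precision, recall


# (fault frames, detected frames, correctly detected frames) from the tables.
counts: Dict[str, Tuple[int, int, int]] = {
    "overall fault": (101, 101, 101),
    "horn fault": (150, 150, 150),
    "carbon contact strip fault": (150, 164, 150),
    "full test video": (401, 415, 401),
}

metrics = {name: precision_recall(*row) for name, row in counts.items()}

for name, (precision, recall) in metrics.items():
    print(f"{name}: precision {precision:.3f}, recall {recall:.1f}")
```

Under these definitions the 14 extra detections for carbon contact strip faults (164 detected against 150 true faults) account for the 0.915 precision, and the same 14 false alarms give 401 / 415 ≈ 0.966 on the full video.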

